Blame the bots? We’re relying more and more on algorithms to regulate our lives – but what happens when they get it wrong, sometimes with catastrophic results?

[TW: Eating disorders]

We saw this play out when Google’s algorithm breached the name suppression of Grace Millane’s alleged murderer. This not only broke the law but also potentially put other people’s safety at risk.

And what did Google do? Well, they said, they never received a court order, so they didn’t have to take action. In other words, they abdicated responsibility. And by then, it was too late.

This ‘algorithmic abdication’ sets a dangerous precedent. Plenty of international companies trade in New Zealand without being meaningfully bound by local law. Facebook’s resistance to paying tax here is another prominent example.

We benefit from an ever-growing global marketplace. But when companies with very little to lose in New Zealand’s relatively minuscule economy and customer base refuse to respond to safety concerns, do the benefits outweigh the risks?

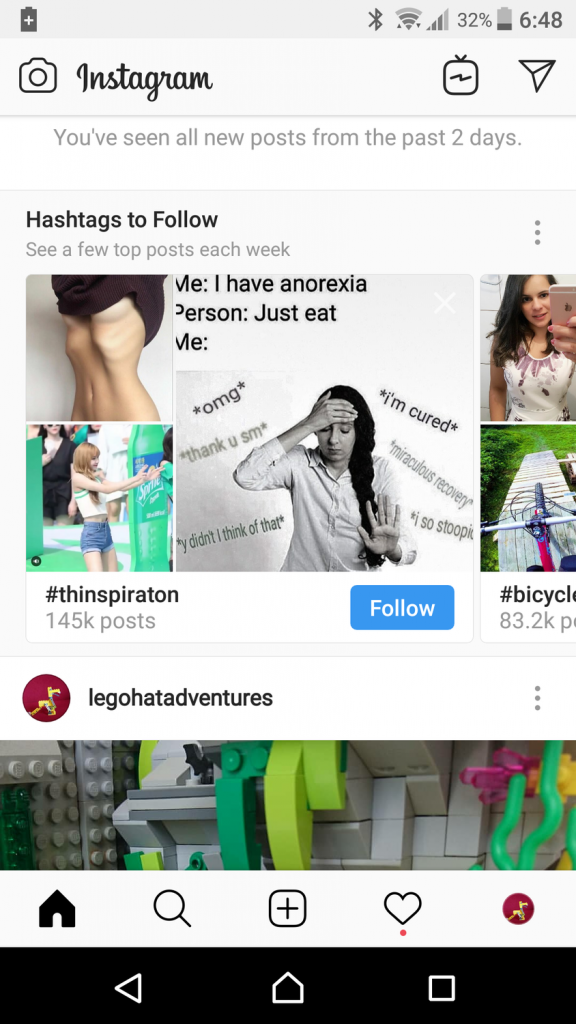

Late last year, a strange and very unwelcome thing happened to my friend Tama on Instagram. He uses his account solely to post pictures of the Lego he makes with his son. But apropos of nothing, the tag #thinspiration was promoted into his feed.

I did a bit of research. #thinspiration is a “shadow banned” tag: if you search for it, it won’t come up. But if you’re already following people who use it, it will. And will it ever. There’s a reason Instagram is known as the channel of choice for models, B-list celebs, yoga gurus and other “fitness” fanatics. Health and fitness content is, of course, not banned, but there’s a conversation to be had about whether some of the images appearing under those tags should be.

My friend soon discovered that while you can report images and users on Instagram, there’s no way to report Instagram’s own recommendations to Instagram. So he took it upon himself to email several eating disorder support groups that were listed on the site. Some of them responded with concern and said they would try to raise the issue.

Realistically, though, it’s hard to see them making much of a difference. Here’s his full account of the story.

Meanwhile, social media giant Tumblr angered users by announcing a ban on all explicit content in December last year. I could see their reasons for doing it, and I could understand the counterarguments. Basically, they said, they were trying to make the site safer.

Interestingly, you can’t see porn on Tumblr, and yet the “pro-ano” community thrives.

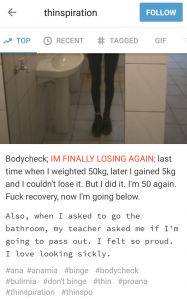

That’s “pro-anorexia” – a tag that people use to promote images of skeletal women, share tips for how to eat less, and push themselves and others towards dangerous weight loss.

This content turns up in my feed, even though I don’t follow the tag or anyone in that community.

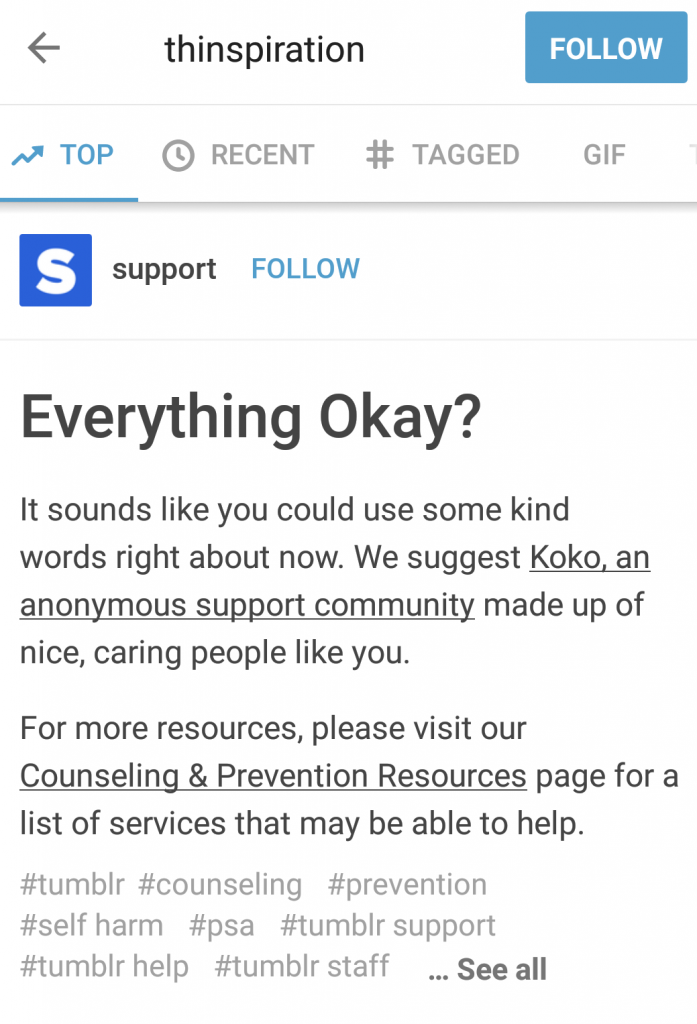

However, if you happen to search #thinspiration on Tumblr, this is the first result to pop up:

Unfortunately, you can ignore that and scroll down to the next results, which get increasingly disturbing. Trigger warning for eating disorders, body image and mental health on this next bit.

It’s deeply questionable that, in the name of “safety”, we’re no longer allowed to look at nudes (I know the explicit content ban covered much more than that), yet this stuff, which is demonstrably harmful, has free rein.

I don’t think bots – or the mega media conglomerates behind them – should be responsible for our safety. They’ve proved that their attempts all too often go horribly wrong.

As Tama put it:

“500 million people use Instagram every day & what those people see or experience has been pretty much left in the hands of bots. Instagram seem to trust their bots so much that there isn’t even a way to report that the bots are suggesting people try starving themselves.

Exactly how much faith are we going to put into bots, without human checks or balances?

What other tags have the Instagram bots been suggesting to users?

The simple answer is “Don’t use Instagram” – but that doesn’t help the 500 million people who’ll use it today. Or the 13-year-old who’s been told to try starving themselves by bots, because the bots know best.”

I can’t put it any more succinctly than that. Advising people not to use social media is next to useless, not to mention unfair. It puts the onus on us, as if it’s our personal responsibility to avoid anything that might harm us. It smacks of the same mentality people use when advising women not to wear short skirts. The skirt isn’t the problem, and staying indoors forever isn’t an option, any more than never using the internet is. And yes, it’s other users who create the harmful content, but it is entirely possible to write algorithms that filter it out based on user settings.

I think there’s a middle ground where users are given tools to create safer spaces for themselves, by curating their feeds and putting warnings on their own content, while recognising, of course, that this is a pretty adult thing to do and many young teens won’t do it. I know I can’t expect to go on the internet and never see anything I don’t want to see. I also know I have some options for carving out places that are safer and happier for me, and I use them.

I’ve struggled to find the right way to end this post, because I haven’t reached a clear, one-sentence black-and-white conclusion.

The internet is still very much a wild frontier. We’re all responsible for it, especially the behemoths that control such large swathes of our experience.

We could start by demanding that they be far more accountable.